- Cyborg Bytes

DeepSeek isn't a National Security Threat, It's a Threat to the Tech Bro Oligarchy

DeepSeek: The AI Powerhouse That’s Freaking Out Silicon Valley

Important Note: This article focuses on the model you can run locally on your machine, not the web app. My overarching goal is to convince you to run your own copy locally rather than use the web app.

There’s a new AI giant in town, and its name is DeepSeek—a next-level artificial intelligence platform developed in China that’s making waves (and not the chill kind). This isn’t just another chatbot or data-crunching tool; DeepSeek is revolutionizing industries with advanced machine learning, automation, and predictive analytics. We’re talking about optimizing supply chains, supercharging financial forecasts, and threatening the very foundations of Big Tech’s dominance.

And guess what? Silicon Valley is in full panic mode. Investors are scrambling, stocks are diving (Nvidia lost close to $600 billion in market value in a single day), and tech execs are sweating through their Patagonia vests. Why? Because DeepSeek’s rapid rise isn’t just about innovation; it’s about power. If this AI continues on its trajectory, it could shift the balance of technological influence away from the West, challenging the dominance of U.S. tech giants in ways they never saw coming.

Spyware or Just Geopolitical Panic?

The moment people hear that DeepSeek is a Chinese AI platform, the immediate reaction is: "Wait… is this spyware?"

It’s not paranoia out of nowhere. China’s strict data and security laws do require companies to cooperate with the government on request; the 2017 National Intelligence Law, for example, obliges organizations to support and cooperate with state intelligence work. And with growing geopolitical tensions, the fear that DeepSeek could be vacuuming up sensitive data for surveillance or economic espionage isn’t exactly far-fetched. Some critics believe this could be another case of covert digital infiltration: think TikTok, but on steroids.

But does this theory actually hold water?

What the Evidence Says

Let’s cut through the noise. Right now, there’s no solid proof that DeepSeek is funneling data straight into the hands of Chinese authorities. The platform operates under global encryption standards, follows internationally accepted data protection protocols, and has already undergone third-party audits by major companies outside of China.

That said, does that mean it’s 100% safe? Not necessarily. But here’s the uncomfortable truth: Most AI platforms—including Western ones—are data black holes. Google, OpenAI, and Meta aren’t exactly beacons of transparency when it comes to how they handle user data. The difference is that Western AI companies aren’t under direct government mandates to share that data with intelligence agencies (at least, not in the same explicit way).

So while DeepSeek isn’t proven to be spyware, the larger issue remains: global tech regulation is a mess. Without clear, international frameworks to control cross-border data sharing, skepticism about foreign AI dominance is inevitable.

The Real Fear: A New World Order in AI

Let’s be real—the panic over DeepSeek isn’t just about security concerns. It’s about power shifts.

For years, Silicon Valley has called the shots in AI development. Now? China is catching up—fast. And DeepSeek isn’t some knockoff competitor; it’s a serious threat to Western AI supremacy. If Chinese tech companies outpace their American counterparts in AI research and adoption, the implications go beyond business. We’re talking about a potential realignment of economic influence, innovation leadership, and even cybersecurity dynamics.

And that? That’s what really keeps U.S. tech leaders up at night.

So, is DeepSeek an incredible AI breakthrough? Yes. Could it be a security risk? Maybe. But more than anything, it’s a wake-up call: the future of AI isn’t guaranteed to be American.

And that changes everything.

Why DeepSeek-V3 Challenges the Status Quo

There’s a quiet revolution shaking up the AI world, and it’s called DeepSeek-V3. Unlike the big-name platforms you know, such as OpenAI’s ChatGPT, DeepSeek is open-source, free to use, and can run on hardware you control (the full 671-billion-parameter model needs a serious multi-GPU setup, but smaller distilled DeepSeek models run on an ordinary computer). No one’s storing your data, monitoring your activity, or deciding what you can and can’t do. It’s privacy and control in a way most platforms can’t (or won’t) offer.

Yet, DeepSeek is being painted as a national security threat. Why? Because it disrupts the current AI business model, where profit-driven companies dominate by locking you into their systems, harvesting your data, and charging a premium for access. DeepSeek flips this power dynamic, putting tools directly in users' hands—and Big Tech doesn’t like that.

This article breaks down why DeepSeek isn’t the threat it’s made out to be—and why the real risks come from platforms working to shut it down.

We’ll explore how DeepSeek-V3 prioritizes privacy, outpaces its competitors in sustainability, and exposes the systemic flaws of centralized AI.

What Sets DeepSeek-V3 Apart?

DeepSeek-V3’s Key Features

DeepSeek-V3 offers something revolutionary in AI: local-first, privacy-centered technology that’s also completely free. While OpenAI requires a connection to its servers, DeepSeek-V3 runs directly on your device, keeping your data safe from prying eyes. Its open-source backend allows anyone to audit the system, ensuring transparency and accountability.

It’s a system built for everyone—from developers who want full control to everyday users wary of being surveilled.

Addressing Its Limitations

No tool is perfect. DeepSeek’s refusal to answer certain politically sensitive questions (such as questions about Tiananmen Square) has drawn criticism, and its frontend remains closed-source. These limitations don’t overshadow its broader mission of democratizing AI, but they’re worth noting.

Why It’s Revolutionary

At its core, DeepSeek-V3 is more than just an alternative to platforms like OpenAI—it’s a paradigm shift. By decentralizing control and making AI tools accessible to anyone, DeepSeek challenges the tech bro oligarchy, proving that cutting-edge technology can exist without surveillance or paywalls.

OpenAI vs. DeepSeek: A Head-to-Head Comparison

Transparency: Open Source vs. Closed Source

OpenAI operates in a walled garden. Its code is closed-source, and users can only speculate about how their data is processed or stored. In contrast, DeepSeek-V3 invites scrutiny with its open-source backend. It’s not just a matter of trust—it’s proof.
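Open weights only amount to "proof" if you check that the file you run is the file that was published. Here is a minimal sketch of that check, assuming the publisher lists a SHA-256 digest for each weight file (Hugging Face, for example, displays one for large files). The function names are illustrative, not part of any DeepSeek tooling.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash the file in 1 MiB chunks so multi-gigabyte weights never sit fully in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        # iter() with a sentinel keeps reading until read() returns b"" at end of file.
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify(path: Path, expected_hex: str) -> bool:
    """Compare the local file's digest with the published one (case-insensitive hex)."""
    return sha256_of(path) == expected_hex.strip().lower()
```

Usage would look like `verify(Path("model.safetensors"), "<digest copied from the model page>")`, where the file name is a hypothetical placeholder. A mismatch means the download is corrupted or not the artifact the community has been scrutinizing.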

Privacy: Local vs. Cloud-Based Processing

OpenAI sends every query to its centralized servers, where user data is processed and potentially stored. DeepSeek-V3 flips this script by running locally on your machine, ensuring your sensitive information stays with you.
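To make "running locally" concrete, here is a minimal sketch assuming you serve a distilled DeepSeek model with Ollama, a third-party local model runner. The model tag `deepseek-r1:7b` and the default port 11434 are assumptions about your setup. The helper refuses to send a prompt anywhere except a loopback address, which is exactly the property the local-first argument depends on.

```python
import ipaddress
import json
import urllib.request
from urllib.parse import urlparse

# Assumption: Ollama's default local endpoint; adjust if your server differs.
OLLAMA_URL = "http://127.0.0.1:11434/api/generate"
# Assumption: a distilled DeepSeek model tag you have already pulled locally.
MODEL = "deepseek-r1:7b"


def is_loopback(url: str) -> bool:
    """True only for 'localhost' or a literal loopback IP; any other hostname is rejected."""
    host = urlparse(url).hostname
    if host == "localhost":
        return True
    try:
        return ipaddress.ip_address(host).is_loopback
    except ValueError:
        # Not an IP literal (or no host at all): refuse rather than trust DNS.
        return False


def build_request(prompt: str, url: str = OLLAMA_URL, model: str = MODEL) -> urllib.request.Request:
    """Build a non-streaming generate request, refusing any non-local target."""
    if not is_loopback(url):
        raise ValueError(f"refusing to send prompt to non-local host: {url}")
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_local_model(prompt: str) -> str:
    """Send the prompt to the local server and return the generated text."""
    with urllib.request.urlopen(build_request(prompt), timeout=300) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    # With "stream": False, Ollama returns one JSON object whose "response" field holds the text.
    return body["response"]
```

With the server running, `print(ask_local_model("Explain the NIST RMF in two sentences."))` keeps the whole round trip on your own machine. Pointing `url` at any remote host raises an error instead of silently shipping your prompt off-device.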

Cost: Free Forever vs. Subscription Fees

OpenAI charges $20 to $200 per month for its tools (ChatGPT Plus and ChatGPT Pro, respectively), locking advanced features behind a paywall. DeepSeek? It’s entirely free. If you have the hardware, you have access.

Performance and Innovation

Despite a far leaner training budget, DeepSeek competes neck-and-neck with OpenAI on benchmarks, proving you don’t need billion-dollar infrastructure to deliver top-tier results. OpenAI relies on wildly expensive NVIDIA GPUs housed in energy-intensive data centers. DeepSeek also uses NVIDIA chips, but far fewer of them: its technical report says V3 was trained on 2,048 export-restricted H800 GPUs, and its mixture-of-experts design activates only about 37 billion of its 671 billion parameters for each token, which makes for a leaner, greener solution.

Privacy and National Security Risks—The RMF Analysis

Breaking Down the Risks

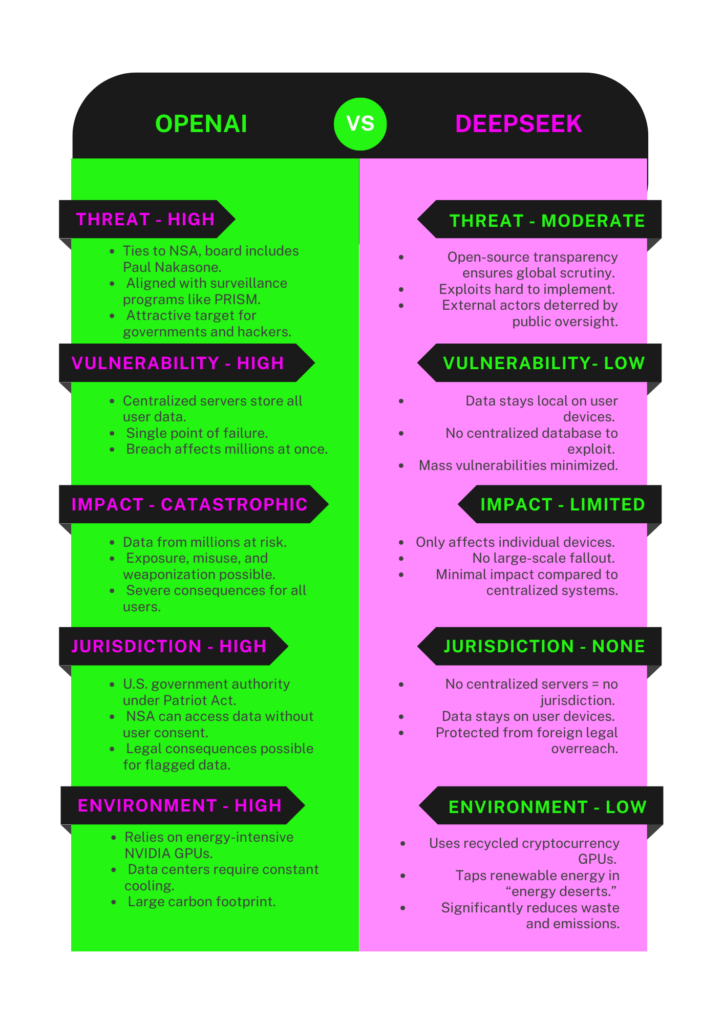

Cybersecurity experts use NIST’s Risk Management Framework (RMF, described in SP 800-37) to evaluate threats, vulnerabilities, and impacts systematically. Let’s see how OpenAI and DeepSeek measure up using this method.

OpenAI: Centralized and Exposed

OpenAI’s closed-source model and centralized data storage make it a prime target for breaches and government overreach. With figures like former NSA director Paul Nakasone on its board, OpenAI’s potential for surveillance is more than theoretical.

Risk Analysis:

Threat: High. The NSA’s history with programs like PRISM shows a clear precedent.

Vulnerability: High. Centralized servers are a single point of failure.

Impact: Catastrophic. Millions of users’ data could be exposed or misused.

DeepSeek-V3: Decentralized and Transparent

DeepSeek-V3’s open-source model and local-first design minimize vulnerabilities. By keeping data on individual devices, it eliminates centralized targets and prevents mass exploitation.

Risk Analysis:

Threat: Moderate. Malicious actors might target the code, but global scrutiny makes hidden exploits unlikely.

Vulnerability: Low. Data stays local, reducing systemic risks.

Impact: Limited. Breaches would only affect individual devices, not an entire network.

Comparison Chart: OpenAI vs. DeepSeek-V3 Risk Analysis

| Risk Factor | OpenAI (NSA Connection) | DeepSeek (Open-Source Transparency) |
|---|---|---|
| Threat Likelihood | High: The NSA’s history of mass data collection programs like PRISM makes OpenAI a significant concern. | Moderate: Malicious actors might try to exploit open-source code, but global scrutiny reduces the chances. |
| Vulnerability | High: Centralized servers make OpenAI a prime target for breaches and misuse. | Low: Local operation ensures data never leaves your device, reducing exposure. |
| Impact of Breach | Catastrophic: A breach could expose sensitive information from millions of users in a centralized database. | Limited: A breach affects only individual devices, not a centralized user base. |

This streamlined comparison shows how OpenAI’s centralized model amplifies risks, while DeepSeek’s decentralized and transparent design minimizes them.
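The ratings in the comparison chart combine a threat likelihood, a vulnerability, and an impact into an overall picture. NIST’s risk-assessment guidance (SP 800-30, used within the RMF) does this with a likelihood-by-impact lookup table; the sketch below swaps in a simpler product of ordinal scores. The three-level scale follows FIPS 199, which describes low, moderate, and high impact as limited, serious, and severe or catastrophic adverse effects. The scoring rule and cut-offs are hypothetical, chosen only to show how the chart’s ratings roll up.

```python
from enum import IntEnum


class Level(IntEnum):
    """Ordinal ratings; FIPS 199 maps low/moderate/high impact to
    limited/serious/severe-or-catastrophic adverse effects."""
    LOW = 1
    MODERATE = 2
    HIGH = 3


def risk_score(threat: Level, vulnerability: Level, impact: Level) -> int:
    """Hypothetical rule: multiply the three ordinal ratings (range 1 to 27)."""
    return int(threat) * int(vulnerability) * int(impact)


def risk_band(score: int) -> str:
    """Hypothetical cut-offs for turning a numeric score into a label."""
    if score >= 18:
        return "High"
    if score >= 6:
        return "Moderate"
    return "Low"


# (threat, vulnerability, impact) ratings transcribed from the comparison chart.
ASSESSMENTS = {
    "OpenAI (centralized)": (Level.HIGH, Level.HIGH, Level.HIGH),
    "DeepSeek (local)": (Level.MODERATE, Level.LOW, Level.LOW),
}

for name, (t, v, i) in ASSESSMENTS.items():
    score = risk_score(t, v, i)
    print(f"{name}: score {score} -> {risk_band(score)}")
```

Running it prints `OpenAI (centralized): score 27 -> High` and `DeepSeek (local): score 2 -> Low`. A multiplicative rule is used here because a single "Low" factor (for example, data that never leaves the device) pulls the whole score down, which mirrors the chart’s reasoning.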

Who’s Watching You? The NSA Can Jail You; China Can’t

Jurisdiction matters. It’s not just about who could access your data but who can legally act on it.

The NSA’s Reach Through OpenAI

OpenAI processes all data on U.S. servers, placing it squarely within the NSA’s reach. Through laws like the Patriot Act and Section 702 of FISA (the authority behind PRISM), the NSA can demand access to your data without your knowledge. If flagged, even innocuous queries could escalate to legal trouble. The centralized nature of OpenAI’s servers makes this risk even more pronounced.

DeepSeek-V3’s Shield Against Foreign Influence

DeepSeek-V3’s decentralized design means your data never leaves your device. Even if bad actors like the Chinese government attempted interference, they lack the legal reach to act against individual users outside their borders. DeepSeek’s local-first approach further limits the scope of potential breaches.

Jurisdiction: The Ultimate Power Play

| Risk Factor | NSA via OpenAI | Chinese Actors via DeepSeek |
|---|---|---|
| Legal Reach | Full authority to access and act on your data, including prosecution | No legal authority to compel or prosecute you |
| Actionable Outcomes | Jail time, fines, or other penalties | None: no jurisdiction over you |
| Centralized Target | Yes: user data aggregated in OpenAI’s servers | No: data stays on individual devices |
| Scale of Exploits | Massive: centralized surveillance | Localized: limited to individual devices |

Environmental and Infrastructure Impacts—The True Cost of AI

DeepSeek’s Sustainable Model

DeepSeek’s efficiency-first approach proves AI doesn’t need massive, power-hungry data centers to deliver results. Models this lean open the door to running on smaller clusters or even repurposed hardware, such as former cryptocurrency-mining GPUs, which would reduce e-waste and potentially capture otherwise “wasted” energy stranded in “energy deserts.”

OpenAI’s Carbon Footprint

OpenAI’s reliance on NVIDIA GPUs in centralized data centers contributes significantly to carbon emissions. The energy demands of cooling alone make this infrastructure environmentally unsustainable.

Why DeepSeek Threatens the Tech Bro Oligarchy

DeepSeek-V3’s open-source, decentralized model directly challenges Big Tech’s monopolistic grip on AI. By empowering users to control their data and access cutting-edge tools for free, it disrupts revenue models based on surveillance capitalism and subscription fees.

This challenge comes at a particularly sensitive time: Sam Altman is attempting to shift OpenAI from its original non-profit structure to a for-profit company, and Project Stargate, a private AI infrastructure venture led by OpenAI, SoftBank, and Oracle, was announced at the White House with a pledge of up to $500 billion.

Meanwhile, DeepSeek matches or beats OpenAI on many major benchmarks while training on less powerful, export-restricted chips at a fraction of the cost: DeepSeek put the final training run for V3 at about $5.6 million in GPU time. This contrast threatens the oligarchy’s hold on AI innovation and their profits, making DeepSeek the true disruptor in the space.

Shifting Power Dynamics

Big Tech thrives on centralized control, locking users into ecosystems they control. DeepSeek hands that power back to users, proving AI can exist without corporate gatekeeping.

Democratizing Innovation

DeepSeek removes barriers to entry, enabling developers, educators, and hobbyists to innovate without asking for permission. This threatens Big Tech’s carefully guarded dominance.

Reframing the National Security Argument

DeepSeek isn’t a national security risk—it’s a threat to the oligarchy. The narrative that open-source platforms are dangerous distracts from the real issue: Big Tech’s profit-driven control over technology.

Who’s Really Afraid of Competition?

The phrase "national security threat" gets thrown around often, but more often than not, it’s a thinly veiled excuse to protect corporate profits. The U.S. has a long history of banning what it can’t compete with, and the AI space is no different. DeepSeek’s rise isn’t just a challenge to the oligarchy—it’s a wake-up call about the misuse of “national security” as a smokescreen for monopolistic practices.

The "National Security" Playbook

Take Huawei phones or BYD cars as examples. These products consistently outperform their U.S. counterparts in price, quality, and innovation. Rather than improve domestic alternatives, the U.S. declared them “security risks” and shut them out of the market: Huawei through export blacklisting and FCC equipment bans, BYD through steep tariffs on Chinese EVs. The same script is now being run against DeepSeek.

Economic Protection, Not Security

By labeling competitors as threats, Big Tech and their government allies sideline open competition. This isn’t about safeguarding users—it’s about shielding billion-dollar revenue streams.

Who benefits? The same corporations that dominate today’s AI industry—locking consumers into overpriced, closed-source systems and reaping profits from data harvesting.

How Propaganda Feeds the Fear Machine

Behind every “threat” narrative lies a well-funded propaganda campaign. Billions are spent portraying foreign competitors as villains, reinforcing the idea that non-U.S. platforms are inherently untrustworthy.

But here’s the truth:

Chinese products labeled as risks often have little to no evidence of actual misuse.

The “national security” tag conveniently distracts from domestic failures, like stagnant innovation or overpriced products.

Viewed through a cybersecurity lens, it’s clear which platform poses the bigger threat.

DeepSeek-V3, despite being open-source and decentralized, faces similar propaganda tactics. The oligarchy doesn’t fear espionage—they fear losing control.

DeepSeek’s Transparency Is the Real Shield

Ironically, DeepSeek’s transparency makes it inherently more secure than the closed systems used by companies like OpenAI. Open-source platforms undergo constant scrutiny from a global community, which makes it far harder to slip malicious code in unnoticed.

This is why the “national security” argument against DeepSeek doesn’t hold water.

The real threat it poses is to the oligarchs, not to the public.

The DeepSeek Data Breach: Why It Had Nothing to Do with the AI Model Itself

When Wiz dropped the news about a data breach involving DeepSeek, the internet did what it always does—panicked. People immediately assumed that DeepSeek’s AI model had been compromised, fueling fears that the platform itself was insecure or even malicious. But here’s the reality:

The breach had nothing to do with DeepSeek’s AI model.

So what actually happened? It wasn’t a failure of the AI, but rather a misconfiguration in the cloud storage setup—a classic case of human error rather than some deep, systemic flaw in the model itself.

Breaking It Down: The Real Cause of the Breach

According to Wiz’s findings, the issue stemmed from inadequate access controls in DeepSeek’s cloud environment: a publicly accessible ClickHouse database, reachable without authentication, left sensitive information exposed, including chat history, log streams, and API secrets. This isn’t an AI problem; it’s a basic cybersecurity misstep, the kind we’ve seen happen to plenty of major tech companies.

To put it simply:

The AI model wasn’t "hacked." No one manipulated DeepSeek’s underlying algorithms or training data.

No backdoors were discovered in the AI itself. There was no evidence that DeepSeek’s model was designed to secretly siphon user data.

It was a cloud security issue. Sensitive data was left exposed due to a configuration error, making it accessible when it shouldn’t have been.
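The failure class described here, a data store reachable from the internet without authentication, is exactly what a routine configuration audit is meant to catch. Below is a minimal sketch that assumes a hypothetical inventory of service settings. The field names and example entries are invented for illustration and are not DeepSeek’s actual configuration.

```python
import ipaddress
from dataclasses import dataclass


@dataclass
class Service:
    """Hypothetical inventory record for one network-facing data store."""
    name: str
    bind_address: str  # address the service listens on
    port: int
    auth_required: bool


def is_internet_exposed(bind_address: str) -> bool:
    """Conservatively treat wildcard and public addresses as exposed."""
    addr = ipaddress.ip_address(bind_address)
    if addr.is_unspecified:  # 0.0.0.0 or :: listens on every interface
        return True
    return addr.is_global  # loopback and private ranges are not global


def audit(services: list[Service]) -> list[str]:
    """Names of services that look exposed AND accept unauthenticated access."""
    return [s.name for s in services
            if is_internet_exposed(s.bind_address) and not s.auth_required]


inventory = [
    Service("analytics-db", "0.0.0.0", 9000, auth_required=False),  # the risky pattern
    Service("cache", "127.0.0.1", 6379, auth_required=False),       # local only
    Service("api-db", "10.0.0.5", 5432, auth_required=True),        # private and authenticated
]

print(audit(inventory))
```

This prints `['analytics-db']`. A real audit would also check firewall and security-group rules, since a wildcard-bound service can still be shielded at the network edge; the point is that this is a fixable operational check, not a property of the AI model.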

Why This Matters

This distinction is important because a cloud storage misconfiguration is fixable—it’s a common, well-documented security risk that companies can (and should) address with better cybersecurity practices. On the other hand, if the AI model itself had been compromised, that would suggest a fundamental flaw in DeepSeek’s design or intent, which would be a much bigger deal.

While this breach raises valid concerns about how DeepSeek handles security, it doesn’t mean the AI model itself is insecure or compromised. Instead, it highlights the ongoing challenge of proper cloud security management—something even the biggest tech companies struggle with.

So, is this a wake-up call for DeepSeek? Absolutely. But is it proof that the AI model itself is dangerous? Not at all.

Reclaiming AI for the People

The rise of platforms like DeepSeek gives us a rare opportunity to reshape the future of AI. It’s not just about adopting a new tool—it’s about rejecting the closed-source technocracy and reclaiming technology as a force for public good.

Choose Open Source: Break Free from Monopolies

DeepSeek-V3 is proof that advanced AI can exist without centralized control or exorbitant fees. By embracing open-source platforms, users gain:

Transparency: Open code means open accountability.

Privacy: Local-first design eliminates data harvesting.

Accessibility: Free tools empower everyone, not just those who can afford subscriptions.

Every click on an open-source platform is a step toward democratizing technology.

Demand Transparency: Hold Big Tech Accountable

Closed-source systems thrive on user ignorance. They collect your data, lock you into their systems, and build billion-dollar empires without answering to anyone.

Ask questions: What happens to your data? Why aren’t these platforms open-source?

Push for accountability: Demand that corporations like OpenAI open their code and adopt transparent practices.

Transparency isn’t optional—it’s a necessity for ethical AI.

Support Decentralization: Take Back Control

The centralized model of AI puts too much power in the hands of a few corporations. DeepSeek’s decentralized approach flips the script, empowering users to:

Keep control of their data.

Avoid costly subscriptions.

Operate without corporate gatekeeping.

Supporting decentralized platforms isn’t just about privacy—it’s about shifting the balance of power away from Big Tech and toward the public.

DeepSeek-V3 Is the Future of Ethical AI

DeepSeek isn’t a threat to national security—it’s a threat to the tech bro oligarchy. By prioritizing transparency, privacy, and sustainability, it proves that AI doesn’t have to be controlled by a handful of billion-dollar corporations.

The true risk lies in allowing platforms like OpenAI to dominate unchecked. Their centralized, closed-source models aren’t just a danger to privacy—they’re a danger to innovation and user empowerment.

This is our chance to reclaim AI as a tool for the many, not the few. By supporting platforms like DeepSeek, we can build a future where technology serves people—not profits.

The choice is clear: continue down the path of surveillance capitalism and corporate control, or embrace a decentralized, ethical, and transparent alternative.

The future of AI is in your hands—which model will you choose?

Stay Curious,

Addie LaMarr